UH Hilo students improve AI for live fish tracking, more

This summer, five students from the University of Hawaiʻi at Hilo worked with a computer scientist to investigate research problems in human-in-the-loop artificial intelligence (AI), a branch of AI that combines human and machine intelligence to build machine learning models. The class was led by Travis Mandel, assistant professor of computer science, and sponsored by the National Science Foundation. Students received a stipend for participating.

“The students have been working really hard and have some interesting work to present,” said Mandel. On July 30, the students virtually presented their group projects.

Human-AI collaboration in fish tracking

Students Chris Hanley and Meynard Ballesteros developed a cross-platform app for detecting and tracking fish. It allows anyone to run fish detectors on live video from a webcam as well as on pre-collected dive videos.

According to the project’s abstract, existing pipelines can accurately detect and track fish in real-world video collected by divers off the coast of Hawaiʻi Island, but none is equipped to guide data collection in the field.

Over the summer, the students trained a wide variety of object detection network architectures on publicly available fish data.

The budding computer scientists explain that, to be useful to divers in the field, object detection and tracking must run on mobile devices rather than on a desktop computer or server.

In the project abstract, they write: “We instrumented our app to report key accuracy and timing metrics, which we compared to desktop performance, finding unexpected dissimilarities. Finally, we explore avenues for running tracking in real-time alongside detections.”

They hope the app will lay the foundation for a new paradigm in how humans and computer vision systems interact during real-time data collection.

Improving human-in-the-loop AI

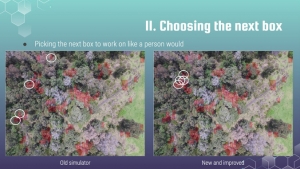

Students Keane Nakatsu, Kaʻimi Beatty and Jaden Kapali worked on a project evaluating a human-in-the-loop annotation interface. They created a range of new simulators to better reflect the diversity of human behavior during annotation.

“Our streamlined evaluation approach allowed us to easily evaluate how various different features, including how the AI reacts to user behavior, animations, etc. affect annotation performance in a rigorous and quantitative way,” the students explained in their summary. “We are also able to easily create and evaluate newer and more complex algorithms to assist humans during the annotation process.”

The students applied deep learning methods to detect novel objects, a task that typically requires acquiring a large dataset of annotated training data.